An In-Depth Look at Docker Network Modes

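# Introduction to Docker Network Modes

Docker provides four network modes out of the box:

- host mode: selected with --net=host.

- none mode: selected with --net=none.

- bridge mode: selected with --net=bridge; this is the default.

- container mode: selected with --net=container:NAME_or_ID.

The networks that exist on a host can be listed with docker network ls. In the sample output below, bridge, host, and none are the built-in networks, while bi and game are user-defined bridge networks. Container mode reuses another container's network namespace, so it does not appear as a network of its own.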

NETWORK ID NAME DRIVER SCOPE

2e3311e3fd75 bi bridge local

04a7022e57fd bridge bridge local

207a0c0b75b6 game bridge local

f6be0ad892a5 host host local

7760fc2ba11d none null local
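To narrow the listing down to one kind of network, docker network ls accepts --filter and --format options. The helper below is a minimal sketch of that idea; the function name networks_by_driver is invented for illustration and is not part of the Docker CLI.

```shell
# List the names of all networks that use a given driver (bridge, host, null, ...).
# networks_by_driver is a hypothetical helper name, not a Docker command.
networks_by_driver() {
    docker network ls --filter "driver=$1" --format '{{.Name}}'
}

# Example (requires a running Docker daemon):
#   networks_by_driver bridge
```

On the host shown above, filtering by the bridge driver should list bi, bridge, and game.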


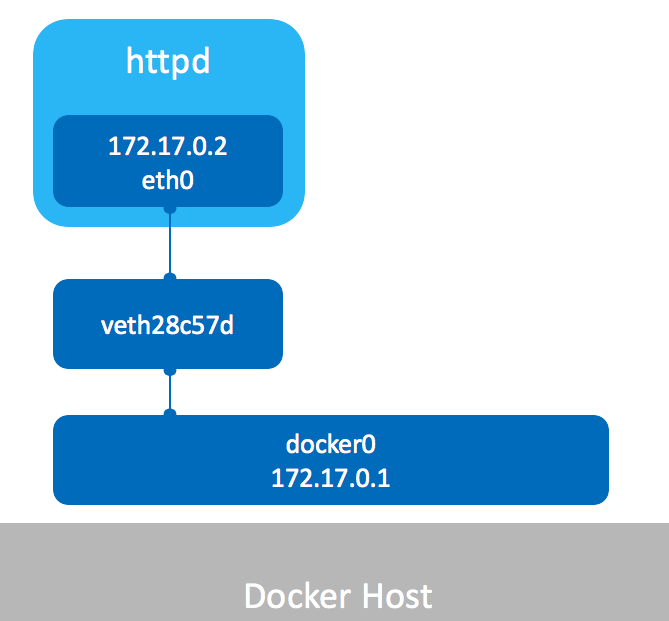

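# bridge Mode

Bridge mode is Docker's default network mode. When the Docker service starts, it creates a virtual bridge named docker0 and assigns it an IP address and subnet that do not overlap with the host's own networks.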

dev@devops:~$ brctl show docker0

bridge name bridge id STP enabled interfaces

docker0 8000.0242fc6483f2 no


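In addition, every new container gets a virtual network interface pair (veth): one end sits inside the container and the other end is attached to the docker0 bridge. Traffic leaving docker0 goes through NAT on the host's network interface, which is how containers reach the public network.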

As shown in the figure below:

A quick demonstration follows:

- After the Docker service starts, ip addr shows that the docker0 interface has the IP 172.17.0.1:

9: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:fc:64:83:f2 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever


- Start a container with docker run -itd redis /bin/bash and run ip addr again; a new virtual interface, veth6e7c4ad@if157, has appeared:

158: veth6e7c4ad@if157: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether ee:36:2b:3b:df:7c brd ff:ff:ff:ff:ff:ff link-netnsid 40


- Use brctl show to inspect the bridge; the container's interface is now attached to docker0:

docker0 8000.0242fc6483f2 no veth6e7c4ad
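On hosts where brctl is not installed, the same information can usually be read with the ip tool from iproute2, and the NAT step can be checked in the iptables nat table. The sketch below assumes a host that uses the iptables backend. The names bridge_ports and has_masquerade are invented for illustration.

```shell
# Print the interfaces attached to a bridge (default: docker0) using iproute2.
# bridge_ports is a hypothetical helper name.
bridge_ports() {
    ip -o link show master "${1:-docker0}" | awk -F': ' '{ print $2 }' | cut -d@ -f1
}

# Succeed if the nat table has a MASQUERADE rule for the given source subnet.
# has_masquerade is a hypothetical helper name; reading the rules needs root.
has_masquerade() {
    iptables -t nat -S POSTROUTING | grep -q -- "-s $1 .*-j MASQUERADE"
}

# Example:
#   bridge_ports docker0
#   has_masquerade 172.17.0.0/16 && echo "NAT rule present"
```

The first helper strips the @ifNNN suffix so that the output matches the interface names printed by brctl show.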

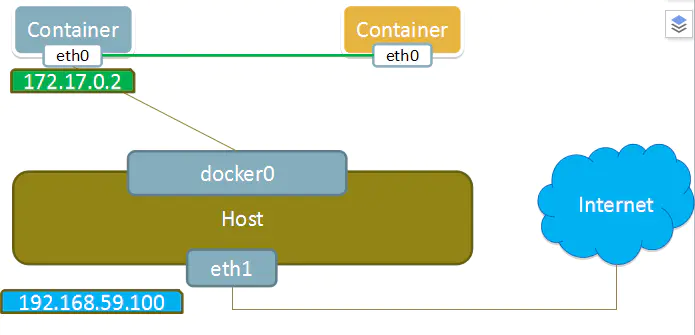

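# host Mode

In host mode a container uses the host's IP address and ports when communicating with the outside world. The container shares the host's network namespace and gets no IP address of its own, so network isolation between container and host is weak in this mode.

Pass --net=host when starting the container to select this mode, for example: docker run -d --net=host mongo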

dev@devops:~$ nc 127.0.0.1 27017

dev@devops:~$ netstat -antp | grep 27017

tcp 0 0 localhost:45624 localhost:27017 TIME_WAIT


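External clients can then reach the application in the container directly at {host0.ip}:port, with no NAT involved, just as if it ran on the host itself. Other aspects of the container, such as its file system and process list, remain isolated from the host.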
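Because a host-mode container shares the host's network namespace, a port opened inside the container should show up in the host's own socket table. The sketch below checks this with ss from iproute2; the function name is_listening is invented for illustration.

```shell
# Succeed if some process is listening on the given TCP port in the current
# network namespace. is_listening is a hypothetical helper name.
is_listening() {
    ss -ltn | awk -v suffix=":$1" '
        # Column 4 of "ss -ltn" is Local Address:Port; compare its tail to ":PORT".
        $1 == "LISTEN" && substr($4, length($4) - length(suffix) + 1) == suffix { found = 1 }
        END { exit !found }'
}

# Example, run on the host after starting mongo with --net=host:
#   is_listening 27017 && echo "mongo is reachable on the host's port 27017"
```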

# container Mode

In container mode, a new container shares the Network Namespace of an existing container instead of getting its own.

Pass --net container:xx when creating the container to make it share the network namespace of container xx:

dev@devops:~$ docker run -d --name redis --net container:ga-test redis

c5b1febc0b20965f2339557d3d92e5558f2a894a0b8f8546f788620b5e0588eb
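One way to confirm that two containers really share a network namespace is to compare the namespace identifier each of them reports under /proc. The sketch below assumes both images ship the readlink utility; the function name same_netns is invented for illustration.

```shell
# Succeed if two running containers report the same network namespace.
# same_netns is a hypothetical helper; it assumes readlink exists in both images.
same_netns() {
    a=$(docker exec "$1" readlink /proc/self/ns/net) || return 2
    b=$(docker exec "$2" readlink /proc/self/ns/net) || return 2
    # Both values look like net:[<inode>]; identical inodes mean a shared namespace.
    [ -n "$a" ] && [ "$a" = "$b" ]
}

# Example:
#   same_netns redis ga-test && echo "redis shares the network namespace of ga-test"
```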


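# none Mode

In none mode the container is effectively offline: it has only a loopback interface and no IP address of its own. Select it with --net=none when creating the container; this mode is rarely used.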

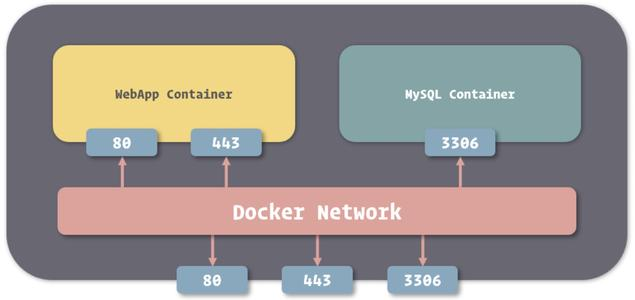

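# Communication Between Containers

Real projects almost always have several containers that need to talk to each other, for example multiple services accessing one MySQL database. Usually it is enough to configure the database address in each service; the containers then communicate over the default bridge.

Start two containers, centos01 and centos02: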

[root@localhost ~]# docker run -itd --name=centos01 mycentos:nettools /bin/bash

982a3d4cdb131bf33d217218c04aad278ac762376337e4180fd2eadc63599541

[root@localhost ~]# docker run -itd --name=centos02 mycentos:nettools /bin/bash

e1c43e2000915b8256b1f0b6793b6f4bd1b2ff33ae1f32c6872ce21d4c5c8e4e


Inspect centos02 with docker inspect e1c43e2000915 to find its IP address, which turns out to be 172.17.0.3.

[

{

"Id": "e1c43e2000915b8256b1f0b6793b6f4bd1b2ff33ae1f32c6872ce21d4c5c8e4e",

"Created": "2020-02-18T08:17:13.893194133Z",

"Path": "/bin/bash",

"Args": [],

"State": {

"Status": "running",

"Running": true,

"Paused": false,

"Restarting": false,

"OOMKilled": false,

"Dead": false,

"Pid": 19853,

"ExitCode": 0,

"Error": "",

"StartedAt": "2020-02-18T08:17:14.24517605Z",

"FinishedAt": "0001-01-01T00:00:00Z"

},

"Image": "sha256:b92ca30f601cb7b594210e041eed8753aabb72dc5c2a18905b6272f5176ffdc0",

"ResolvConfPath": "/var/lib/docker/containers/e1c43e2000915b8256b1f0b6793b6f4bd1b2ff33ae1f32c6872ce21d4c5c8e4e/resolv.conf",

"HostnamePath": "/var/lib/docker/containers/e1c43e2000915b8256b1f0b6793b6f4bd1b2ff33ae1f32c6872ce21d4c5c8e4e/hostname",

"HostsPath": "/var/lib/docker/containers/e1c43e2000915b8256b1f0b6793b6f4bd1b2ff33ae1f32c6872ce21d4c5c8e4e/hosts",

"LogPath": "/var/lib/docker/containers/e1c43e2000915b8256b1f0b6793b6f4bd1b2ff33ae1f32c6872ce21d4c5c8e4e/e1c43e2000915b8256b1f0b6793b6f4bd1b2ff33ae1f32c6872ce21d4c5c8e4e-json.log",

"Name": "/centos02",

"RestartCount": 0,

"Driver": "overlay2",

"Platform": "linux",

"MountLabel": "",

"ProcessLabel": "",

"AppArmorProfile": "",

"ExecIDs": null,

"HostConfig": {

"Binds": null,

"ContainerIDFile": "",

"LogConfig": {

"Type": "json-file",

"Config": {}

},

"NetworkMode": "default",

"PortBindings": {},

"RestartPolicy": {

"Name": "no",

"MaximumRetryCount": 0

},

"AutoRemove": false,

"VolumeDriver": "",

"VolumesFrom": null,

"CapAdd": null,

"CapDrop": null,

"Capabilities": null,

"Dns": [],

"DnsOptions": [],

"DnsSearch": [],

"ExtraHosts": null,

"GroupAdd": null,

"IpcMode": "private",

"Cgroup": "",

"Links": null,

"OomScoreAdj": 0,

"PidMode": "",

"Privileged": false,

"PublishAllPorts": false,

"ReadonlyRootfs": false,

"SecurityOpt": null,

"UTSMode": "",

"UsernsMode": "",

"ShmSize": 67108864,

"Runtime": "runc",

"ConsoleSize": [

0,

0

],

"Isolation": "",

"CpuShares": 0,

"Memory": 0,

"NanoCpus": 0,

"CgroupParent": "",

"BlkioWeight": 0,

"BlkioWeightDevice": [],

"BlkioDeviceReadBps": null,

"BlkioDeviceWriteBps": null,

"BlkioDeviceReadIOps": null,

"BlkioDeviceWriteIOps": null,

"CpuPeriod": 0,

"CpuQuota": 0,

"CpuRealtimePeriod": 0,

"CpuRealtimeRuntime": 0,

"CpusetCpus": "",

"CpusetMems": "",

"Devices": [],

"DeviceCgroupRules": null,

"DeviceRequests": null,

"KernelMemory": 0,

"KernelMemoryTCP": 0,

"MemoryReservation": 0,

"MemorySwap": 0,

"MemorySwappiness": null,

"OomKillDisable": false,

"PidsLimit": null,

"Ulimits": null,

"CpuCount": 0,

"CpuPercent": 0,

"IOMaximumIOps": 0,

"IOMaximumBandwidth": 0,

"MaskedPaths": [

"/proc/asound",

"/proc/acpi",

"/proc/kcore",

"/proc/keys",

"/proc/latency_stats",

"/proc/timer_list",

"/proc/timer_stats",

"/proc/sched_debug",

"/proc/scsi",

"/sys/firmware"

],

"ReadonlyPaths": [

"/proc/bus",

"/proc/fs",

"/proc/irq",

"/proc/sys",

"/proc/sysrq-trigger"

]

},

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/fe38b48216b0e036c8a594635f6392d0f06f5da202e695a7052aa36ca510ddc1-init/diff:/var/lib/docker/overlay2/0dd6db3d6d6ee7fc48b4e13654a8d4414b545834df6e1524475649f2d67454de/diff:/var/lib/docker/overlay2/c9ac4844a5f33fad6a906ae9b4b86fa9f058c7ed1048bffad5b7a4aca454b33b/diff",

"MergedDir": "/var/lib/docker/overlay2/fe38b48216b0e036c8a594635f6392d0f06f5da202e695a7052aa36ca510ddc1/merged",

"UpperDir": "/var/lib/docker/overlay2/fe38b48216b0e036c8a594635f6392d0f06f5da202e695a7052aa36ca510ddc1/diff",

"WorkDir": "/var/lib/docker/overlay2/fe38b48216b0e036c8a594635f6392d0f06f5da202e695a7052aa36ca510ddc1/work"

},

"Name": "overlay2"

},

"Mounts": [],

"Config": {

"Hostname": "e1c43e200091",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"Tty": true,

"OpenStdin": true,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"

],

"Cmd": [

"/bin/bash"

],

"Image": "mycentos:nettools",

"Volumes": null,

"WorkingDir": "",

"Entrypoint": null,

"OnBuild": null,

"Labels": {

"org.label-schema.build-date": "20191001",

"org.label-schema.license": "GPLv2",

"org.label-schema.name": "CentOS Base Image",

"org.label-schema.schema-version": "1.0",

"org.label-schema.vendor": "CentOS"

}

},

"NetworkSettings": {

"Bridge": "",

"SandboxID": "e8d1342d5dab4b15608ef4be8cacc83c30ca579c8ce676c39a91683da5e662b5",

"HairpinMode": false,

"LinkLocalIPv6Address": "",

"LinkLocalIPv6PrefixLen": 0,

"Ports": {},

"SandboxKey": "/var/run/docker/netns/e8d1342d5dab",

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"EndpointID": "f335c5cfcf7110d793f546ac44cf18bfd079f990ad3820646149f2adc28be692",

"Gateway": "172.17.0.1",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"IPAddress": "172.17.0.3",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"MacAddress": "02:42:ac:11:00:03",

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "76342053278e2345a41515f3c5728095c5a97f6230f7a3edf5e41017c67a0a9f",

"EndpointID": "f335c5cfcf7110d793f546ac44cf18bfd079f990ad3820646149f2adc28be692",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.3",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:03",

"DriverOpts": null

}

}

}

}

]
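The full inspect output is long when only the IP address is needed. docker inspect accepts a Go template through -f, so the address can be extracted directly. The helper below is a minimal sketch; the function name container_ip is invented for illustration.

```shell
# Print the IP address of a container, one line per attached network.
# container_ip is a hypothetical helper name.
container_ip() {
    docker inspect -f '{{range .NetworkSettings.Networks}}{{println .IPAddress}}{{end}}' "$1"
}

# Example:
#   container_ip centos02
```

For the centos02 container above, this should print the same 172.17.0.3 that appears under NetworkSettings.Networks.bridge.IPAddress.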


Next, enter centos01 and ping 172.17.0.3; the ping succeeds:

[root@localhost ~]# docker exec -it centos01 /bin/bash

[root@982a3d4cdb13 /]# ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.154 ms

64 bytes from 172.17.0.3: icmp_seq=2 ttl=64 time=0.105 ms

64 bytes from 172.17.0.3: icmp_seq=3 ttl=64 time=0.121 ms

64 bytes from 172.17.0.3: icmp_seq=4 ttl=64 time=0.071 ms

64 bytes from 172.17.0.3: icmp_seq=5 ttl=64 time=0.070 ms

^C

--- 172.17.0.3 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 3999ms

rtt min/avg/max/mdev = 0.070/0.104/0.154/0.032 ms

[root@982a3d4cdb13 /]#


Then ping centos02 by name; this fails:

[root@982a3d4cdb13 /]# ping centos02

ping: centos02: Name or service not known


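In other words, containers on the default bridge cannot reach each other by container name.

Back on the host, docker network inspect bridge shows the network settings of the attached containers: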

[root@localhost ~]# docker network inspect bridge

[

{

"Name": "bridge",

"Id": "76342053278e2345a41515f3c5728095c5a97f6230f7a3edf5e41017c67a0a9f",

"Created": "2020-02-17T07:17:21.082636014+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"982a3d4cdb131bf33d217218c04aad278ac762376337e4180fd2eadc63599541": {

"Name": "centos01",

"EndpointID": "6d05788dc1e1ae12d6048879d5a0dc5012db828258e2de3ca91a7a1e1bc7cc33",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

},

"e1c43e2000915b8256b1f0b6793b6f4bd1b2ff33ae1f32c6872ce21d4c5c8e4e": {

"Name": "centos02",

"EndpointID": "f335c5cfcf7110d793f546ac44cf18bfd079f990ad3820646149f2adc28be692",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]
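The Containers section of this output is the only place where names and addresses on the default bridge are tied together. A template can reduce it to a compact list; the sketch below assumes the field names shown in the output above, and the function name network_members is invented for illustration.

```shell
# Print "name address/prefix" for every container attached to a network.
# network_members is a hypothetical helper name; it defaults to the bridge network.
network_members() {
    docker network inspect -f '{{range .Containers}}{{println .Name .IPv4Address}}{{end}}' "${1:-bridge}"
}

# Example:
#   network_members bridge
```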


53

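Accessing services between containers directly by IP has a drawback. Suppose the MySQL container in the example above crashes and cannot be restarted, so a new one has to be created with docker run. Its IP may change, and every service that talks to MySQL directly would then need its configuration updated, which is inconvenient. Docker therefore also supports communicating with other containers by container name.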

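# One-Way Communication with --link

One-way communication means that only one side initiates network requests. For example, an application service queries a MySQL server, while the MySQL server normally has no need to call the application. Again, start two containers to simulate this, one standing in for mysql and one for tomcat. The tomcat container is linked to the mysql container with --link mysql. Note that the mysql container must be started first, otherwise the command fails.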

[root@localhost ~]# docker run -itd --name mysql mycentos:nettools /bin/bash

97ad026117718a0524eec1eb7db04a0dc3317b228b44abcc2b65a857919d8589

[root@localhost ~]# docker run -itd --name tomcat --link mysql mycentos:nettools /bin/bash

14c127543930d9c992f38514ba1b3db30a9e350272f41800e3e6870be5357450


Next, enter the tomcat container and ping mysql by container name:

[root@localhost ~]# docker exec -it 14c12754393 /bin/bash

[root@14c127543930 /]# ping mysql

PING mysql (172.17.0.2) 56(84) bytes of data.

64 bytes from mysql (172.17.0.2): icmp_seq=1 ttl=64 time=0.109 ms

64 bytes from mysql (172.17.0.2): icmp_seq=2 ttl=64 time=0.118 ms

64 bytes from mysql (172.17.0.2): icmp_seq=3 ttl=64 time=0.140 ms

^C

--- mysql ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.109/0.122/0.140/0.015 ms


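Resolving a name for ping comes down to either a mapping in the local hosts file or a query to a DNS server. The hosts file in the tomcat container contains an entry with the mysql container's IP address: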

[root@14c127543930 /]# cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.2 mysql 97ad02611771

172.17.0.3 14c127543930


Now enter the mysql container and try to ping tomcat; this fails:

[root@localhost ~]# docker exec -it 97ad026117 /bin/bash

[root@97ad02611771 /]# ping tomcat

ping: tomcat: Name or service not known


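In other words, --link provides one-way communication, and the name resolution is implemented by rewriting the hosts file of the linking container.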
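The asymmetry can be reproduced with a plain hosts-file lookup: a name resolves only if the local hosts file has an entry for it. The sketch below mimics that lookup with awk. It is an illustration of the mechanism, not how the system resolver is implemented, and the function name hosts_lookup is invented.

```shell
# Look up a host name in a hosts-format file and print the first matching IP.
# hosts_lookup is a hypothetical helper; the file defaults to /etc/hosts.
hosts_lookup() {
    awk -v name="$1" '
        $1 ~ /^#/ { next }    # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
    ' "${2:-/etc/hosts}"
}

# Example, inside the tomcat container:
#   hosts_lookup mysql     # finds the entry added by --link
# Inside the mysql container, hosts_lookup tomcat prints nothing,
# because --link did not touch that container's hosts file.
```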

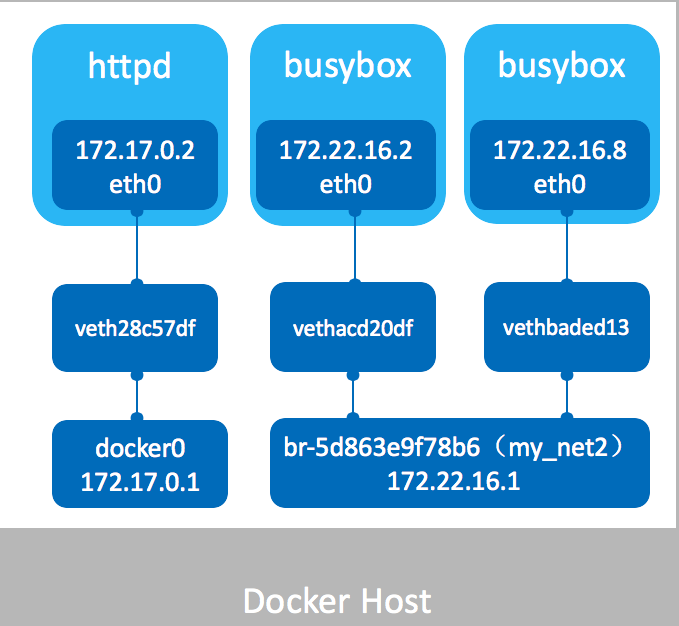

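# Two-Way Communication over a User-Defined Bridge

Since Docker 1.10, the docker daemon includes an embedded DNS server that lets containers reach each other directly by container name. This does not work on the default bridge, however; a user-defined bridge network is required.

1. Create a new bridge network, def_bridge: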

[root@localhost ~]# docker network create -d bridge def_bridge

faeddc1fbfbf98f6572766003ff2cc27a023d43699fd994397013e09077c3784


2. Start two containers named mysql and tomcat:

[root@localhost ~]# docker run -itd --name mysql mycentos:nettools /bin/bash

6a9f1e9d0bb051ea31e5727193a1aed99b8039c2cde629fe2bd387def1abb5bd

[root@localhost ~]# docker run -itd --name tomcat mycentos:nettools /bin/bash

3e5950876d69712c3e2f8fd10268c9a21f1f21dd9937d1010ad2d5f709932831


3. Connect both running containers to the new bridge network:

[root@localhost ~]# docker network connect def_bridge mysql

[root@localhost ~]# docker network connect def_bridge tomcat


4. Enter each container and ping the other by name; both pings succeed:

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3e5950876d69 mycentos:nettools "/bin/bash" 6 minutes ago Up 6 minutes tomcat

6a9f1e9d0bb0 mycentos:nettools "/bin/bash" 6 minutes ago Up 6 minutes mysql

[root@localhost ~]# docker exec -it 6a9f1e9d0bb0 /bin/bash

[root@6a9f1e9d0bb0 /]# ping tomcat

PING tomcat (172.18.0.3) 56(84) bytes of data.

64 bytes from tomcat.def_bridge (172.18.0.3): icmp_seq=1 ttl=64 time=0.100 ms

64 bytes from tomcat.def_bridge (172.18.0.3): icmp_seq=2 ttl=64 time=0.057 ms

64 bytes from tomcat.def_bridge (172.18.0.3): icmp_seq=3 ttl=64 time=0.097 ms

64 bytes from tomcat.def_bridge (172.18.0.3): icmp_seq=4 ttl=64 time=0.060 ms

--- tomcat ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3002ms

rtt min/avg/max/mdev = 0.057/0.078/0.100/0.021 ms

[root@6a9f1e9d0bb0 /]# exit

exit

[root@localhost ~]# docker exec -it 3e5950876d69 /bin/bash

[root@3e5950876d69 /]# ping mysql

PING mysql (172.18.0.2) 56(84) bytes of data.

64 bytes from mysql.def_bridge (172.18.0.2): icmp_seq=1 ttl=64 time=0.040 ms

64 bytes from mysql.def_bridge (172.18.0.2): icmp_seq=2 ttl=64 time=0.067 ms

64 bytes from mysql.def_bridge (172.18.0.2): icmp_seq=3 ttl=64 time=0.055 ms

64 bytes from mysql.def_bridge (172.18.0.2): icmp_seq=4 ttl=64 time=0.130 ms

--- mysql ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3000ms

rtt min/avg/max/mdev = 0.040/0.073/0.130/0.034 ms
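Starting a container and then connecting it to the network can also be done in one step by passing --network to docker run. A container started this way is attached only to the named network, not to the default bridge as well. The helper below is a minimal sketch; the function name run_on_network is invented for illustration.

```shell
# Start a detached container directly on a user-defined network.
# run_on_network is a hypothetical helper: run_on_network NETWORK NAME IMAGE
run_on_network() {
    docker run -itd --name "$2" --network "$1" "$3" /bin/bash
}

# Example (the network must already exist):
#   run_on_network def_bridge mysql  mycentos:nettools
#   run_on_network def_bridge tomcat mycentos:nettools
```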


Finally, check the hosts file and resolv.conf in the tomcat container. The hosts file has no entry for the other container, while resolv.conf points to the DNS server 127.0.0.11:

[root@3e5950876d69 /]# cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 3e5950876d69

172.18.0.3 3e5950876d69

[root@3e5950876d69 /]# cat /etc/resolv.conf

nameserver 127.0.0.11

options ndots:0


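In other words, two-way communication on a user-defined bridge relies on Docker's embedded DNS service to resolve container names to IP addresses.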
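A quick way to tell whether a container is using the embedded DNS server is to look for the 127.0.0.11 nameserver entry in its resolv.conf. The sketch below performs that check on a resolv.conf-format file; the function name uses_embedded_dns is invented for illustration.

```shell
# Succeed if a resolv.conf-format file points at Docker's embedded DNS server.
# uses_embedded_dns is a hypothetical helper; the file defaults to /etc/resolv.conf.
uses_embedded_dns() {
    grep -Eq '^nameserver[[:space:]]+127\.0\.0\.11[[:space:]]*$' "${1:-/etc/resolv.conf}"
}

# Example, inside a container:
#   uses_embedded_dns && echo "names are resolved by Docker's embedded DNS"
```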